The tricky part of implementing this was that the Quake BSP format doesn't store redundant information. Although it contains the file offset of each lightmap, it doesn't store the lightmap's dimensions. The renderer must compute them by walking the vertices of each polygon face, converting their coordinates to texture space, and finding the bounding rectangle. As with the texture data, I packed the lightmaps into a single atlas texture and stored each vertex's lightmap atlas coordinates as vertex attributes.
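Here is a minimal sketch of that dimension calculation. It follows the layout the original Quake engine uses: each face's texture info supplies an S axis, a T axis, and an offset for each, and lightmaps have one sample every 16 texels on a 16-texel-aligned grid. The Vec3, TexInfo, LightmapExtents, and computeLightmapExtents names are hypothetical, chosen for illustration.

```cpp
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical types for illustration; the real BSP structures differ.
struct Vec3 { float x, y, z; };

// Texture projection for a face: s = dot(v, sAxis) + sOffset, likewise for t.
struct TexInfo {
    Vec3 sAxis; float sOffset;
    Vec3 tAxis; float tOffset;
};

struct LightmapExtents {
    int minS, minT;     // texture-space origin of the lightmap (a multiple of 16)
    int width, height;  // lightmap size in samples
};

static float dot3(const Vec3 &a, const Vec3 &b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Walk the face's vertices, project them into texture space, take the
// bounding rectangle, and convert it to lightmap samples (one per 16 texels).
LightmapExtents computeLightmapExtents(const std::vector<Vec3> &faceVerts,
                                       const TexInfo &tex) {
    const float inf = std::numeric_limits<float>::infinity();
    float minS = inf, maxS = -inf, minT = inf, maxT = -inf;
    for (const Vec3 &v : faceVerts) {
        float s = dot3(v, tex.sAxis) + tex.sOffset;
        float t = dot3(v, tex.tAxis) + tex.tOffset;
        minS = std::fmin(minS, s); maxS = std::fmax(maxS, s);
        minT = std::fmin(minT, t); maxT = std::fmax(maxT, t);
    }
    // Snap the bounds outward to the 16-texel lightmap grid.
    int bminS = static_cast<int>(std::floor(minS / 16.0f));
    int bmaxS = static_cast<int>(std::ceil(maxS / 16.0f));
    int bminT = static_cast<int>(std::floor(minT / 16.0f));
    int bmaxT = static_cast<int>(std::ceil(maxT / 16.0f));
    // A span of N grid cells has N + 1 sample points.
    return {bminS * 16, bminT * 16, bmaxS - bminS + 1, bmaxT - bminT + 1};
}
```

For example, a 64x32-texel face aligned to the grid spans 4x2 cells and so needs a 5x3 lightmap. The extra row and column exist because samples sit on the grid corners, not in the cell centers.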

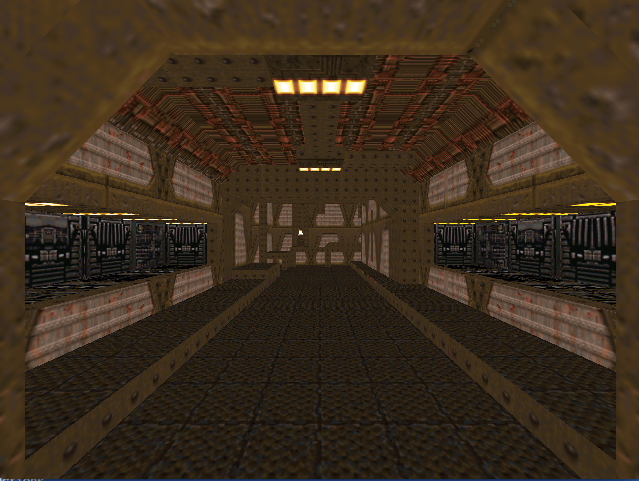

Here's a rendered frame with only texture mapping, no lighting:

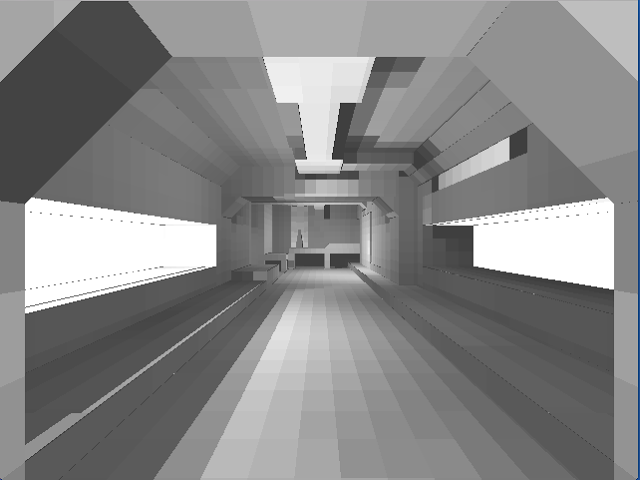

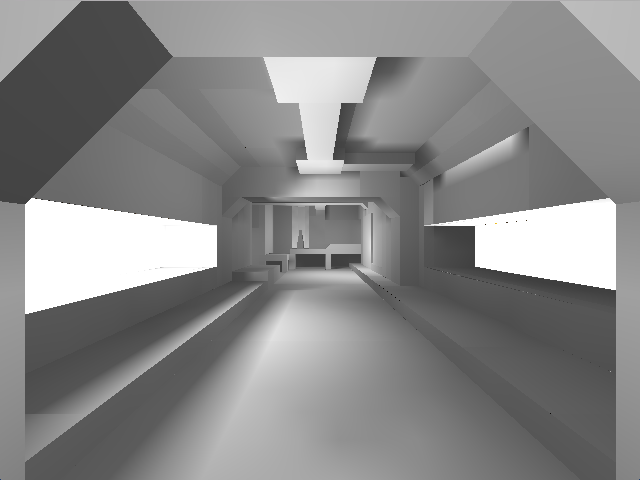

Here are the same lightmaps with bilinear filtering enabled on the texture sampler, which removes the sharp edges.
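The difference between the two images comes from how the sampler picks a value between lightmap samples. Below is a minimal scalar sketch for a single-channel lightmap. It assumes coordinates are in texel units with texel centers at half-integers, and that addressing clamps to the edge. The Lightmap type and both sample functions are hypothetical. The renderer's own readPixels is vectorized and is not shown here.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical single-channel lightmap, stored row-major, values in [0, 1].
struct Lightmap {
    int width, height;
    std::vector<float> texels;

    // Clamp-to-edge lookup so samples near the border stay inside the map.
    float at(int x, int y) const {
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return texels[y * width + x];
    }
};

// Nearest neighbor: returns the texel containing (u, v), which gives hard edges.
float sampleNearest(const Lightmap &lm, float u, float v) {
    return lm.at(static_cast<int>(std::floor(u)), static_cast<int>(std::floor(v)));
}

// Bilinear: blends the four nearest texel centers by distance to (u, v).
float sampleBilinear(const Lightmap &lm, float u, float v) {
    float x = u - 0.5f, y = v - 0.5f;  // shift so texel centers land on integers
    int x0 = static_cast<int>(std::floor(x));
    int y0 = static_cast<int>(std::floor(y));
    float fx = x - x0, fy = y - y0;    // fractional position between centers
    float top = lm.at(x0, y0) * (1 - fx) + lm.at(x0 + 1, y0) * fx;
    float bottom = lm.at(x0, y0 + 1) * (1 - fx) + lm.at(x0 + 1, y0 + 1) * fx;
    return top * (1 - fy) + bottom * fy;
}
```

With a dark texel next to a bright one, nearest sampling jumps from 0 to 1 at the texel boundary, while bilinear sampling ramps smoothly between the two centers. In an atlas, the four-texel footprint at the edge of one lightmap can reach into its neighbor, so padding each lightmap is a common way to avoid bleeding.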

Now that the lightmap data is available, I need to multiply each texel color by the corresponding lightmap value. When id added hardware acceleration to Quake, desktop GPUs didn't support programmable shaders, so GLQuake needed workarounds such as drawing the lightmaps in a second, blended pass. Since my rendering engine does support shaders, the implementation is simpler. The pixel shader is below. The new code is the second texture lookup (the lightmap) and the multiplication at the end. As described in previous posts, the shader works on 16 pixels at a time.

```cpp
void shadePixels(vecf16_t outColor[4],
                 const vecf16_t inParams[16],
                 const void *_castToUniforms,
                 const Texture * const sampler[kMaxActiveTextures],
                 unsigned short mask) const override
{
    // Diffuse texture: wrap U/V within this face's sub-rectangle of the texture atlas.
    vecf16_t atlasU = wrappedAtlasCoord(
        inParams[kParamTextureU - 4],
        inParams[kParamAtlasLeft - 4],
        inParams[kParamAtlasWidth - 4]);
    vecf16_t atlasV = wrappedAtlasCoord(
        inParams[kParamTextureV - 4],
        inParams[kParamAtlasTop - 4],
        inParams[kParamAtlasHeight - 4]);
    sampler[0]->readPixels(atlasU, atlasV, mask, outColor);

    // New: sample the lightmap atlas. Lightmaps don't repeat, so the
    // interpolated coordinates are used directly.
    vecf16_t lightmapValue[4];
    sampler[1]->readPixels(
        inParams[kParamLightmapU - 4],
        inParams[kParamLightmapV - 4],
        mask,
        lightmapValue);

    // New: intensity comes from the lowest channel, plus a constant ambient term.
    vecf16_t intensity = lightmapValue[0] + splatf(0.4f);

    // New: modulate the texel color by the light intensity.
    outColor[kColorR] *= intensity;
    outColor[kColorG] *= intensity;
    outColor[kColorB] *= intensity;
}
```

In the code above, I've added two new interpolated parameters: the lightmap U and V coordinates. Unlike texture coordinates, lightmap coordinates never repeat across a face, so they don't need the wrapping that wrappedAtlasCoord performs for textures. My texture sampler currently supports only 32-bit textures, so I use the lowest channel for the intensity and ignore the others. I add a constant ambient value (0.4) to the lightmap sample, because the scene was too dark otherwise, and multiply the texel color by that intensity.
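For readers who find the 16-wide vector code hard to follow, here is a scalar sketch of the same per-pixel steps. It is a reconstruction under stated assumptions, not the renderer's code. wrapAtlasCoordScalar is a hypothetical guess at what wrappedAtlasCoord does, assuming U is in repeat units (1.0 is one full copy of the texture) and left/width are in atlas units. lightmapIntensity assumes the "lowest channel" is the least-significant byte, normalized by 255.

```cpp
#include <cmath>
#include <cstdint>

struct Color { float r, g, b; };

// Hypothetical scalar analogue of wrappedAtlasCoord: keep the fractional part
// of u (so the texture repeats), then map it into the face's atlas sub-rectangle.
float wrapAtlasCoordScalar(float u, float left, float width) {
    return left + (u - std::floor(u)) * width;
}

// Assumes the intensity is stored in the least-significant byte of a 32-bit
// lightmap texel; the other three channels are ignored.
float lightmapIntensity(std::uint32_t lightmapTexel, float ambient = 0.4f) {
    return static_cast<float>(lightmapTexel & 0xffu) / 255.0f + ambient;
}

// Modulate the texel color by the intensity (alpha is not modeled here).
Color applyLight(Color texel, float intensity) {
    return {texel.r * intensity, texel.g * intensity, texel.b * intensity};
}
```

With the 0.4 ambient term, the intensity ranges from 0.4 (no light) to 1.4 (full light). Fully lit areas therefore come out brighter than the texture alone, while unlit areas keep 40 percent of their color.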

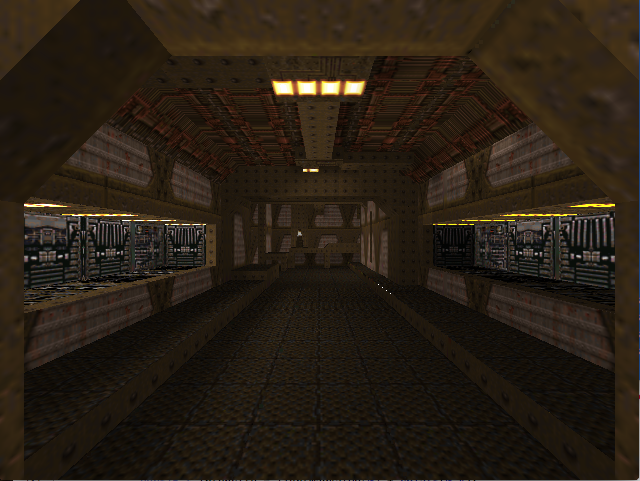

Here is the original scene with the lightmaps applied. The effect is subtle, but it looks much more realistic.

The four white dots on the right are gaps where adjacent triangles don't meet exactly. This is probably a bug in my rasterizer.

Lightmapping also costs more instructions: about 30 million to render the first frame, compared to 22 million without lightmaps. Two of the biggest contributors to the profile in the previous post were parameter interpolation and texture sampling, and lightmaps add more of both: two extra interpolated parameters and a second texture lookup per pixel. I could reduce this by adding an 8-bit texture format for the lightmap, which would remove the redundant computations for the unused color channels.
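The increase from 22 million to 30 million instructions is about 36 percent. The sketch below shows why a single-channel format would cut part of the sampling cost. It assumes a sampler that blends each channel of the four neighboring texels independently, as a bilinear filter does. blendTexels is hypothetical and is not the renderer's API.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Blend four neighboring texels with bilinear weights, one channel at a time.
// Each texel is kChannels bytes: 4 for a 32-bit RGBA format, 1 for an 8-bit
// single-channel format.
template <std::size_t kChannels>
std::array<float, kChannels> blendTexels(
    const std::array<std::array<std::uint8_t, kChannels>, 4> &texels,
    const std::array<float, 4> &weights) {
    std::array<float, kChannels> result{};
    // The work is 4 * kChannels multiply-adds: 16 for RGBA, 4 for 8-bit.
    for (std::size_t c = 0; c < kChannels; ++c)
        for (std::size_t i = 0; i < 4; ++i)
            result[c] += texels[i][c] / 255.0f * weights[i];
    return result;
}
```

Since the shader reads only the lowest channel, the RGBA path spends three quarters of this blending work on values that are thrown away. An 8-bit format would also shrink the lightmap atlas to a quarter of its size.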
